Video event recorders and telematics platforms are advancing rapidly with machine vision and artificial intelligence (AI).

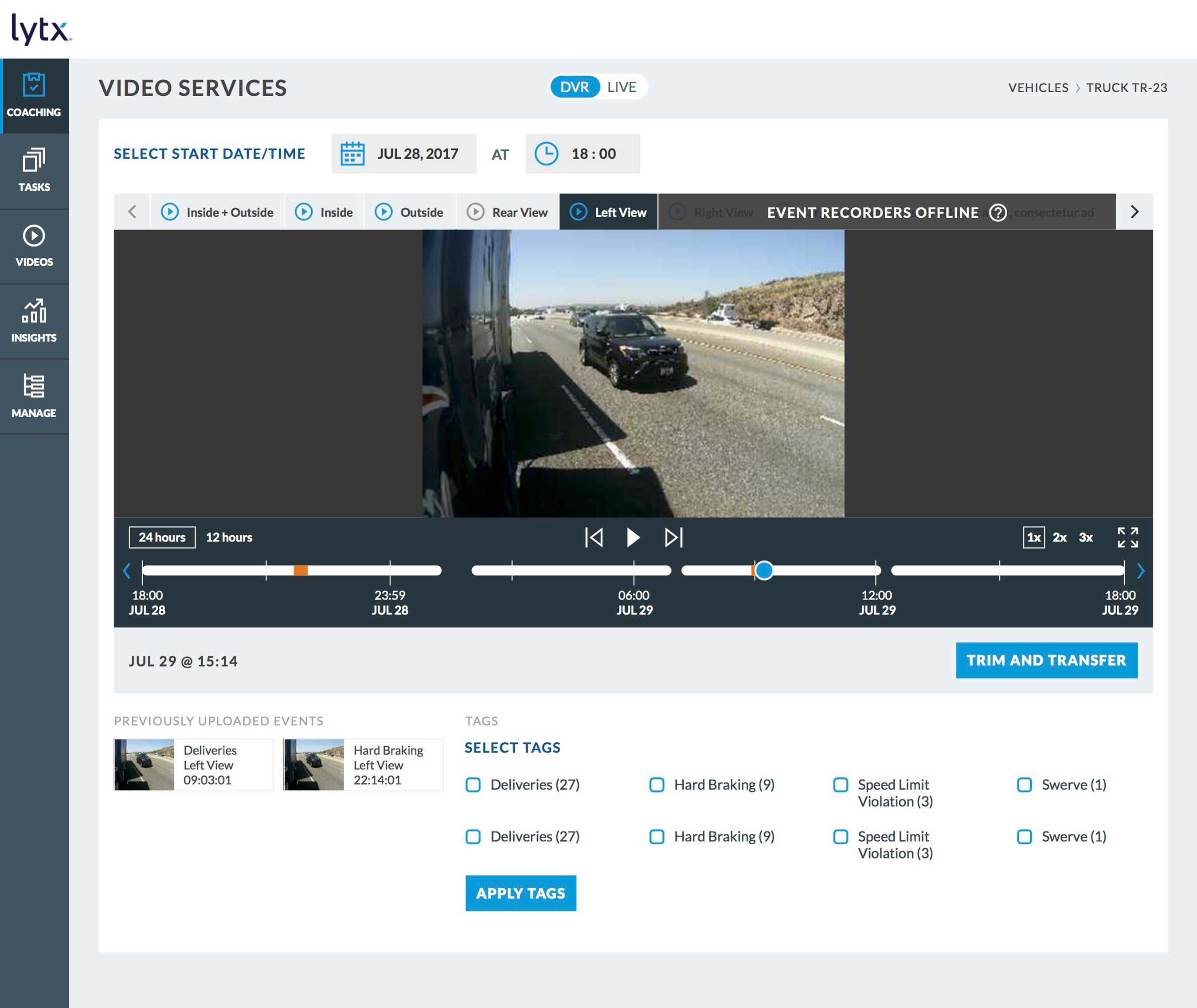

With Lytx Video Services, a fleet manager can capture and review video inside and around vehicles.

Early versions of these products, no more than a decade old by now, are limited in comparison with newer versions that use these technologies.

Lytx, a pioneer of video-based driver safety systems, recently shared with CCJ how it uses machine vision and AI to simplify the workflow for fleets while expanding the capabilities of safety and risk management.

Starting out

About eight years ago, Lytx started down a path to use supervised and unsupervised machine learning in its DriveCam platform, explains Brandon Nixon, the company’s chief executive officer.

Machine learning starts onboard the vehicle with edge computing devices that detect “trigger” events. Algorithms in these devices constantly monitor streaming video and data from integrated cameras (machine vision) as well as from the vehicle databus and sensors.

Examples of basic trigger events include rapid deceleration and speeding. When these and more complex events occur, the devices capture and transmit video and data event files to servers in the cloud.
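To make the idea of a trigger concrete, here is a minimal sketch of threshold-based detection for the two basic triggers named above. It assumes the device receives timestamped speed samples plus the posted speed limit; the `Sample` type, both threshold values and the trigger names are hypothetical, not Lytx's actual logic, and real devices also weigh video and other sensor data.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical thresholds for illustration only.
HARD_BRAKE_MPS2 = 4.0   # deceleration (m/s^2) treated as "rapid"
SPEED_MARGIN_MPS = 2.0  # allowed margin over the posted limit (m/s)


@dataclass
class Sample:
    t: float            # timestamp in seconds
    speed: float        # vehicle speed in m/s (e.g. read from the databus)
    speed_limit: float  # posted limit in m/s (e.g. from map data)


def detect_triggers(samples: list[Sample]) -> list[tuple[float, str]]:
    """Return (timestamp, trigger_name) pairs for basic trigger events."""
    triggers = []
    for prev, cur in zip(samples, samples[1:]):
        dt = cur.t - prev.t
        if dt <= 0:
            continue  # skip duplicate or out-of-order timestamps
        decel = (prev.speed - cur.speed) / dt
        if decel >= HARD_BRAKE_MPS2:
            triggers.append((cur.t, "rapid_deceleration"))
        if cur.speed > cur.speed_limit + SPEED_MARGIN_MPS:
            triggers.append((cur.t, "speeding"))
    return triggers
```

On a real device, each trigger would then start the capture and upload of a video and data event file.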

Supervised learning starts in Lytx’s 24/7 Review Center, where behavior analysts review the event files and verify that the algorithms “tagged” the video and event data properly. As needed, analysts can correct or add tags in the metadata based on their observations of the root causes of risk.
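The review step can be pictured as turning machine-assigned tags into verified labels that later training can rely on. The sketch below uses a hypothetical `EventFile` record with simple tag sets; it illustrates the supervised-learning idea and is not Lytx's data model.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class EventFile:
    event_id: str
    machine_tags: set[str]                                 # tags assigned on the device
    analyst_tags: set[str] = field(default_factory=set)   # tags after human review
    reviewed: bool = False

    def review(self, remove=(), add=()):
        """Analyst verifies the tags: drops incorrect ones and adds missed ones."""
        self.analyst_tags = (self.machine_tags - set(remove)) | set(add)
        self.reviewed = True

    def training_label(self):
        """Reviewed tags become the supervised label; unreviewed events have none."""
        return frozenset(self.analyst_tags) if self.reviewed else None
```

For example, an analyst might remove a `distracted` tag from a hard-braking event and add `following_too_close` after seeing the video.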

The event files and metadata then move upstream to an AI engine in the cloud. This is where unsupervised machine learning takes place automatically, using a statistical process called clustering and other AI methods. The learning process draws on a vast database of driving events and variables that include location, time of day and vehicle type.
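Clustering groups events whose features look alike without needing any labels. As an illustration only, the sketch below runs plain k-means, seeded deterministically with a farthest-first start, on numeric feature vectors. Lytx has not said which clustering algorithm it uses, and the choice of features (for instance, scaled location and hour of day) is an assumption; categorical variables such as vehicle type would first need a numeric encoding.

```python
import math


def farthest_first(points, k):
    """Deterministic seeding: start at the first point, then repeatedly add
    the point farthest from all centroids chosen so far."""
    centroids = [points[0]]
    while len(centroids) < k:
        centroids.append(max(points, key=lambda p: min(math.dist(p, c) for c in centroids)))
    return centroids


def kmeans(points, k, iters=20):
    """Plain k-means on equal-length numeric tuples (one tuple per event)."""
    centroids = farthest_first(points, k)
    labels = [0] * len(points)
    for _ in range(iters):
        # Assignment step: each event joins its nearest centroid.
        new_labels = [min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points]
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, lab in zip(points, new_labels) if lab == c]
            if members:  # leave a centroid in place if its cluster is empty
                centroids[c] = tuple(sum(dim) / len(members) for dim in zip(*members))
        if new_labels == labels:
            break  # assignments stopped changing: converged
        labels = new_labels
    return labels, centroids
```

Each resulting cluster is a candidate "pattern" of similar events that analysts or later models can examine.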

The machine learning process comes full circle by updating the algorithms in the edge devices over the air. Machine vision can then automatically, and more accurately, identify more trigger events and risky behaviors.

“You can’t have a human reviewing all that video, all of the time, but you can certainly have a machine doing it,” says Nixon of the machine learning process. “A machine never gets tired and never has to go on a break.”

ActiveVision

Two years ago, Lytx released an optional service called ActiveVision that layers on the DriveCam platform. The service uses machine vision to detect additional patterns of risk caused by lane departures, distracted driving and unsafe following distances.

Lytx ActiveVision uses machine learning to detect behaviors due to fatigue and distracted driving.

Lytx identified the patterns over time with its machine learning process. For example, one pattern of distraction is when a driver has two or three consecutive swerves within a traffic lane, Nixon says. This pattern can be detected more accurately by combining it with machine vision from a driver-facing camera that determines where the driver was looking when the swerving occurred.
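One way to express the swerve pattern in code is to group swerve timestamps into runs and then check whether the driver-facing camera saw the driver looking away near any swerve in the run. This is a hypothetical sketch: the inputs (`swerve_times`, `gaze_off_road_times`), the 30-second window and the one-second gaze tolerance are illustrative assumptions, not values Lytx has published.

```python
from bisect import bisect_left, bisect_right


def distraction_candidates(swerve_times, gaze_off_road_times,
                           min_swerves=2, window_s=30.0, gaze_tol_s=1.0):
    """Return (first_swerve_time, swerve_count, looked_away) for each run of
    consecutive swerves that fits inside window_s."""
    swerves = sorted(swerve_times)
    gaze = sorted(gaze_off_road_times)
    flagged = []
    i = 0
    while i < len(swerves):
        # Grow a run of swerves that all fall within window_s of the first one.
        j = i
        while j + 1 < len(swerves) and swerves[j + 1] - swerves[i] <= window_s:
            j += 1
        run = swerves[i:j + 1]
        if len(run) >= min_swerves:
            # True if any off-road gaze sample lies within gaze_tol_s of a swerve.
            looked_away = any(
                bisect_right(gaze, t + gaze_tol_s) > bisect_left(gaze, t - gaze_tol_s)
                for t in run
            )
            flagged.append((run[0], len(run), looked_away))
            i = j + 1
        else:
            i += 1
    return flagged
```

A run with `looked_away` set to `True` is stronger evidence of distraction than swerving alone.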

Overall, comparing the results of ActiveVision versus the stand-alone DriveCam platform shows that ActiveVision identifies:

- 60 percent more lane departures and the root causes behind them

- Up to 24 percent more drivers who are driving while distracted

- Up to 65 percent more events where the driver is identified as following too closely, and the root causes behind them.

CCJ’s sister publication, Overdrive, recently interviewed Brian Kohlwes, safety vice president and chief counsel for East Dubuque, Illinois-based refrigerated hauler Hirschbach Motor Lines. He calls the ActiveVision add-on platform a “game-changer” regarding fatigue.

With its ability to detect lane departure, track speed relative to traffic and capture video of the driver, “it allows us to be proactive” on detecting unsafe behavior, Kohlwes says. Accidents have been much less frequent at the 950-truck fleet since it adopted ActiveVision in December.

Refining the process

As an example of how the machine learning process yields better results and more automation over time, Nixon describes a distracted driving event that occurs in a construction zone. During the video review process, an analyst observes the driver pass a construction area on the right-hand side of the road. The driver looks right and then swerves to the left to get around the construction.

Initially, such an event would be tagged as distracted driving. Through the supervised and unsupervised machine learning process, a pattern emerges as similar events occur at the same location. The algorithms onboard the devices are updated so that the machine vision more accurately determines whether or not a safety exception occurs given the circumstances.
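The construction-zone example can be approximated with a simple location rule: once enough reviewed swerves at one spot have been judged not to be distraction, similar events there are no longer flagged. This sketch swaps in a coarse grid-cell count for the unspecified learning process; the cell size, the `swerve_explained` tag and the three-event threshold are hypothetical.

```python
from collections import Counter


def cell(lat, lon, size_deg=0.001):
    """Snap a coordinate to a coarse grid cell (about 100 m north-south at this size)."""
    return (round(lat / size_deg), round(lon / size_deg))


def learn_context_cells(reviewed_events, min_events=3):
    """Return cells where analysts repeatedly judged a swerve to be explained
    by the roadside context rather than by distraction."""
    counts = Counter(
        cell(e["lat"], e["lon"]) for e in reviewed_events if e["tag"] == "swerve_explained"
    )
    return {c for c, n in counts.items() if n >= min_events}


def classify_swerve(event, context_cells):
    """Suppress the distraction flag for swerves inside a learned context cell."""
    if cell(event["lat"], event["lon"]) in context_cells:
        return "no_exception"
    return "distracted_driving"
```

Because construction zones are temporary, a practical version would also need to expire old cells; that detail is left out here.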

Lytx Video Services is an optional offering to capture and retrieve video from multiple cameras around the vehicle.

Unsupervised machine learning can also take place immediately. For example, Nixon says ActiveVision can identify when a driver makes a “rolling stop.” Its machine vision algorithms compare the location of the vehicle with map data on intersections with stop signs and traffic signals.

The machine vision can also recognize a stop sign or traffic signal with real-time video data. By combining the data sources, the system is able to immediately detect if a driver fails to come to a stop at an intersection before turning.
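Combining map data with on-camera sign recognition might look like the following sketch. It assumes the system already knows the distance to the stop line and the vehicle speed for each sample; the function name, the 0.5 m/s full-stop threshold and the 15 m approach zone are hypothetical values chosen for illustration.

```python
STOP_SPEED_MPS = 0.5    # hypothetical: at or below this counts as a full stop
APPROACH_ZONE_M = 15.0  # hypothetical: samples this close to the stop line are checked


def is_rolling_stop(samples, map_says_stop, vision_sees_stop):
    """samples: (distance_to_stop_line_m, speed_mps) pairs as the vehicle approaches."""
    if not (map_says_stop or vision_sees_stop):
        return False  # no stop control detected, so there is nothing to evaluate
    in_zone = [speed for dist, speed in samples if 0.0 <= dist <= APPROACH_ZONE_M]
    if not in_zone:
        return False  # not enough data near the intersection to judge
    # A rolling stop: the vehicle never slowed to full-stop speed in the zone.
    return min(in_zone) > STOP_SPEED_MPS
```

Here either source (map or vision) is enough to trigger the check, which helps when map data is out of date or a sign is obscured; requiring both would trade coverage for fewer false alerts.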

Volume matters

Lytx is accelerating its machine learning through its large and growing data set, Nixon says. The company now has ActiveVision outfitted on more than 50,000 vehicles. Its DriveCam platform is installed in more than 400,000 vehicles.

With all of these connected vehicles, Lytx has more than 70 billion driving miles in its database, which is growing at a rate of one billion miles every two weeks, he says.
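For scale, assuming that rate holds steady, the database grows by roughly 26 billion miles a year, a little more than a third of the current 70 billion total:

```latex
\frac{1\ \text{billion miles}}{2\ \text{weeks}} \times 52\ \frac{\text{weeks}}{\text{year}}
  = 26\ \text{billion miles per year},
\qquad \frac{26}{70} \approx 0.37
```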

Lytx is applying the same machine learning process to Lytx Video Services (LVS), the latest enhancement to the DriveCam program. LVS offers an always-on capability for video retrieval, with side-view cameras and other expandable options for a full view around the vehicle.

When multiple cameras are installed around a vehicle, Lytx will be able to apply machine learning to the video clip recordings for exception-based reporting and analytics.

Creating machine vision for LVS might be of interest to a final-mile delivery fleet, for instance. A camera could record the condition of packages upon delivery and verify that the driver completed the delivery.

Other companies might want to install cameras at the rear of their vehicles to record loading and unloading activities. Over time, machine learning could lead to an automated alert if a driver takes a package down a ramp walking backward instead of forward, for example.

Similarly, utility companies may want machine vision with LVS to identify when employees operating a bucket truck are not strapped in or not wearing hard hats.

“Machine vision and AI can detect and learn that,” he says. “The latency built into the system opens us up to do anything and to learn anything.”

Fleet managers use an online portal from Lytx to review clips of the events along with various reporting and analytical tools that are part of a refined workflow process for coaching drivers.

Note: This article was written by Aaron Huff, senior editor at Commercial Carrier Journal, a partner publication of Hard Working Trucks.